Even Excel has its cell limitations

MS Excel can display 1,048,576 rows per worksheet. That may seem like a huge number in everyday use, but there are plenty of scenarios where it isn’t enough.

Whether you’re looking at log files or large data sets, it’s easy to come across CSV files with millions of rows or enormous text files. Since Excel stops loading data once it hits its row limit, how exactly do you open them? Let’s find out.

Why Can’t Normal Text Editors Open Really Large Files?

A computer has gigabytes of storage, so why can’t text editors open large files?

There are two factors at play here. First, some applications have a hard-coded limit on how much data they can display. No matter how much memory your PC has, they just won’t use it.

The second issue is RAM. Many text editors have no hard limit on the number of rows, but they load the entire file into system RAM before displaying it. If the file, plus the editor’s own overhead, doesn’t fit in the available memory, the process fails.
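To make the RAM point concrete, here is a minimal Python sketch of the alternative: reading a file lazily, one line at a time, so memory use stays flat regardless of file size. The function name `view_lines` is hypothetical and the sketch assumes a UTF-8 file. It still has to scan past the first `start` lines, whereas a dedicated viewer may keep an index of line offsets to jump straight to a position.

```python
from itertools import islice


def view_lines(path, start, count, encoding="utf-8"):
    """Return `count` lines beginning at 0-based line `start`.

    The file object is iterated lazily, so only one line at a time is
    held in memory. By contrast, f.read() or f.readlines() would pull
    the whole file into RAM, which is what overwhelms many editors.
    """
    with open(path, encoding=encoding) as f:
        # islice skips the first `start` lines without storing them,
        # then yields at most `count` lines.
        return [line.rstrip("\n") for line in islice(f, start, start + count)]
```

For example, `view_lines("huge.log", 1_000_000, 20)` returns twenty lines from deep inside the file without ever holding the rest of it in memory.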

Method #1: Using Free Editors

The best way to view extremely large text files is to use… a text editor. Not just any text editor, but the tools meant for writing code. Such apps can usually handle large files without a hitch, and most of them are free.

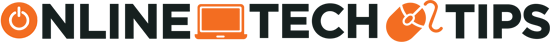

Large Text File Viewer is probably the simplest of these applications. It’s really easy to use, works fast and has a very low resource footprint. The only downside? It cannot edit the files. But if you only want to view large CSV files, this is hands down the best tool for the job.

If you need to edit large text files as well, try Emacs. Originally created for Unix systems, it works perfectly well on Windows too and can handle large files. Similarly, Neovim and Sublime Text are two lightweight code editors that can open gigabyte-sized CSV and text files.

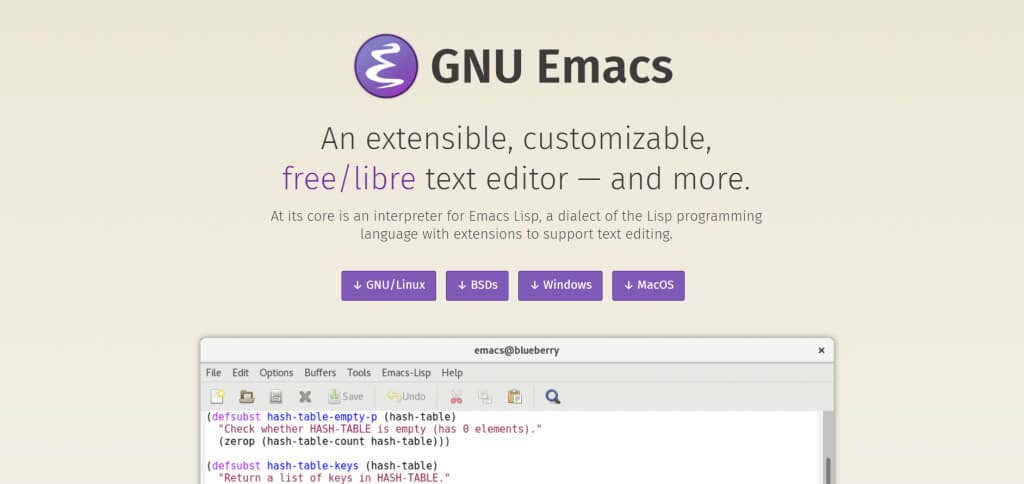

If all you need is to search through large log files, klogg is the tool for you. An actively maintained fork of the popular glogg, it lets you run complex searches through enormous text files with ease. Since computer-generated log files often run to millions of rows, klogg is designed to handle such file sizes without an issue.
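klogg is a GUI tool, but the core idea behind searching a huge log without opening it in an editor can be sketched in a few lines of Python. This is a grep-style illustration, not how klogg is implemented; `search_log` is a hypothetical helper, and the pattern uses Python’s `re` syntax.

```python
import re


def search_log(path, pattern, encoding="utf-8"):
    """Yield (line_number, line) for every line matching `pattern`.

    Lines are read one at a time, so memory use stays flat even for
    multi-gigabyte logs.
    """
    regex = re.compile(pattern)
    # errors="replace" keeps a stray invalid byte from aborting the scan
    with open(path, encoding=encoding, errors="replace") as f:
        for number, line in enumerate(f, start=1):
            if regex.search(line):
                yield number, line.rstrip("\n")
```

Usage: `for n, line in search_log("app.log", r"ERROR|timeout"): print(n, line)` prints each matching line with its 1-based line number.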

Method #2: Split Into Multiple Parts

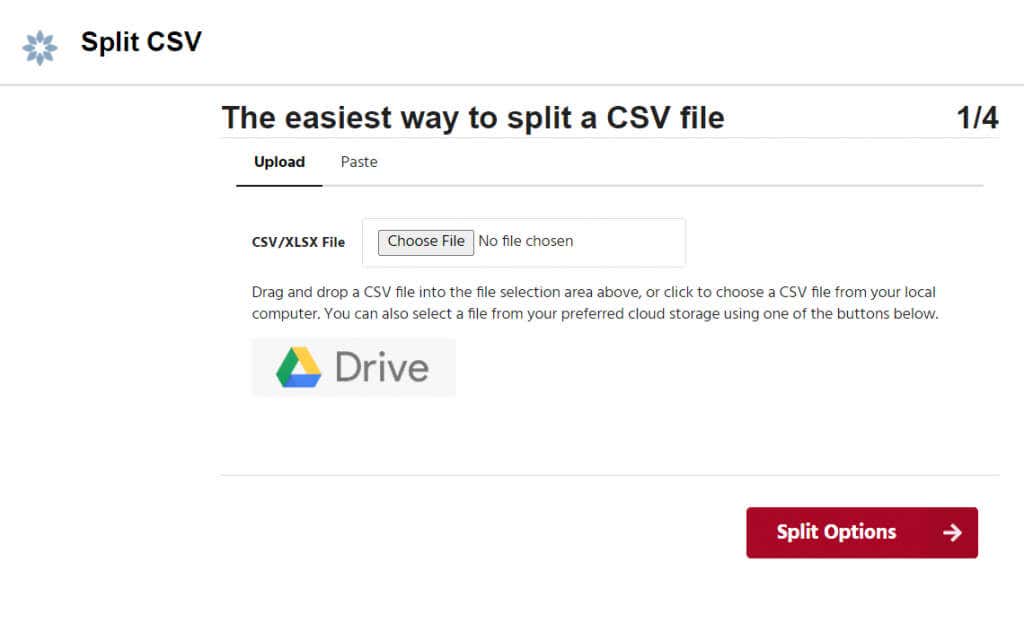

The whole problem with trying to open large CSV files is that they are too large. But what if you were to split these into multiple smaller files?

This is a popular solution, as it generally doesn’t involve having to learn the interface of a new text editor. Instead, you can use one of the many CSV splitters available online to break up the large file into a number of easy-to-open files. Each of these files can then be accessed normally.
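If you’d rather not upload your data to an online splitter, a local split takes only a few lines of Python. This sketch assumes a UTF-8 file with a single header row; `split_csv` and the `*_partN.csv` naming scheme are hypothetical. It parses the file with the standard `csv` module rather than cutting on raw line breaks, and it copies the header into every chunk.

```python
import csv
from pathlib import Path


def split_csv(path, rows_per_file, out_dir=".", encoding="utf-8"):
    """Split a CSV into numbered chunk files, each starting with the header row.

    Returns the list of chunk paths that were written.
    """
    src = Path(path)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    with src.open(newline="", encoding=encoding) as f:
        reader = csv.reader(f)  # handles quoted fields, including embedded newlines
        header = next(reader, None)
        if header is None:
            return written  # empty input file
        chunk, writer = None, None
        try:
            for i, row in enumerate(reader):
                if i % rows_per_file == 0:
                    # Start a new chunk file and repeat the header in it.
                    if chunk is not None:
                        chunk.close()
                    chunk_path = out / f"{src.stem}_part{len(written) + 1}.csv"
                    chunk = chunk_path.open("w", newline="", encoding=encoding)
                    writer = csv.writer(chunk)
                    writer.writerow(header)
                    written.append(chunk_path)
                writer.writerow(row)
        finally:
            if chunk is not None:
                chunk.close()
    return written
```

With `rows_per_file=1_000_000`, each chunk holds one million data rows plus the header, which stays under Excel’s 1,048,576-row limit.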

However, this isn’t the best way to go about it. A naive splitter that cuts the file every N lines or bytes can break a row in half, split a quoted field that contains a line break, or leave every chunk after the first without its header row. Moreover, opening each chunk separately prevents you from filtering through the whole dataset at once.
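The quoted-field problem is easy to reproduce. In this small Python example (the sample data is made up), one record spans two physical lines, so a splitter that counts line breaks sees one more "row" than actually exists, and cutting after the second line would leave the quoted field unterminated.

```python
import csv
import io

# A tiny CSV whose second record has a quoted field containing a line break.
data = 'id,comment\n1,"first line\nsecond line"\n2,plain\n'

physical_lines = data.splitlines()
records = list(csv.reader(io.StringIO(data, newline="")))

print(len(physical_lines))  # 4 physical lines
print(len(records))         # 3 records: header + 2 rows
```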

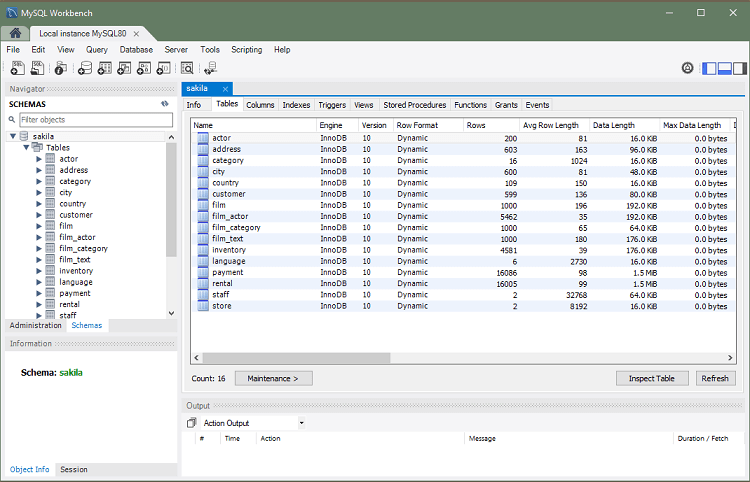

Method #3: Import Into a Database

Text and .csv files extending to multiple gigabytes are generally large datasets. So why not just import them into a database?

SQL is the most common language for querying databases these days. Many database systems use it, and one of the easiest to get started with is probably MySQL. As luck would have it, you can import a CSV file straight into a MySQL table, for example with MySQL’s LOAD DATA INFILE statement.
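As a lighter-weight stand-in for MySQL, the sketch below loads a CSV into SQLite, which ships with Python’s standard library and needs no server. This is a swapped-in technique, not the MySQL workflow described above. It assumes a UTF-8 file with a header row of unique column names, stores every column as TEXT, and uses a hypothetical helper named `csv_to_sqlite`. Rows are inserted in batches, so the whole file never has to sit in memory.

```python
import csv
import sqlite3
from itertools import islice


def _quote_ident(name):
    """Quote an SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def csv_to_sqlite(csv_path, db_path, table, batch_size=50_000, encoding="utf-8"):
    """Stream a CSV into an SQLite table in batches; return the row count."""
    conn = sqlite3.connect(db_path)
    try:
        with open(csv_path, newline="", encoding=encoding) as f:
            reader = csv.reader(f)
            header = next(reader, None)
            if header is None:
                return 0  # empty input file
            tbl = _quote_ident(table)
            cols = ", ".join(f"{_quote_ident(c)} TEXT" for c in header)
            placeholders = ", ".join("?" for _ in header)
            conn.execute(f"CREATE TABLE IF NOT EXISTS {tbl} ({cols})")
            total = 0
            while True:
                # Pull the next batch of rows; an empty list means end of file.
                batch = list(islice(reader, batch_size))
                if not batch:
                    break
                conn.executemany(f"INSERT INTO {tbl} VALUES ({placeholders})", batch)
                total += len(batch)
            conn.commit()
            return total
    finally:
        conn.close()
```

Once loaded, you can filter with ordinary SQL, e.g. `SELECT * FROM sales WHERE region = 'north'`. The `sqlite3` command-line shell also offers an `.import` command that covers the same import step without any code.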

This is by no means the easiest way to deal with large CSV files, so we only recommend it if you work with large datasets on a regular basis. If MySQL sounds too tough, you can always import your .csv files into MS Access instead, keeping in mind that an Access database is capped at 2 GB.

Method #4: Analyze With Python Libraries

When you’re working with a .csv file with millions of rows of data, you’re obviously not going to be able to make much sense of it manually. You probably want to filter the data and run specific queries to understand trends.

So why not write Python code to do just that?

Once again, this is not the most user-friendly method. While Python isn’t the hardest programming language to learn, it is coding, so it might not be the best approach for you. Still, if you find yourself having to parse through really large CSV files on a daily basis, you might want to automate the task with some Python code.
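As an example of the kind of task worth automating, here is a minimal sketch that totals one numeric column per group while streaming the file row by row. It uses only the standard `csv` module; `total_by` and the column names in the usage line are hypothetical, and rows with a missing or non-numeric value are simply skipped.

```python
import csv
from collections import defaultdict


def total_by(path, group_col, value_col, encoding="utf-8"):
    """Sum `value_col` per distinct value of `group_col`, one row at a time."""
    totals = defaultdict(float)
    with open(path, newline="", encoding=encoding) as f:
        for row in csv.DictReader(f):
            try:
                totals[row[group_col]] += float(row[value_col])
            except (TypeError, ValueError):
                continue  # missing (None) or non-numeric value: skip the row
    return dict(totals)
```

Calling `total_by("sales.csv", "region", "amount")` returns a dictionary mapping each region to its summed amount. If you prefer pandas, `pandas.read_csv` accepts a `chunksize` argument that returns the file as an iterator of smaller DataFrames, which lets you apply the same streaming pattern with pandas’ richer toolkit.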

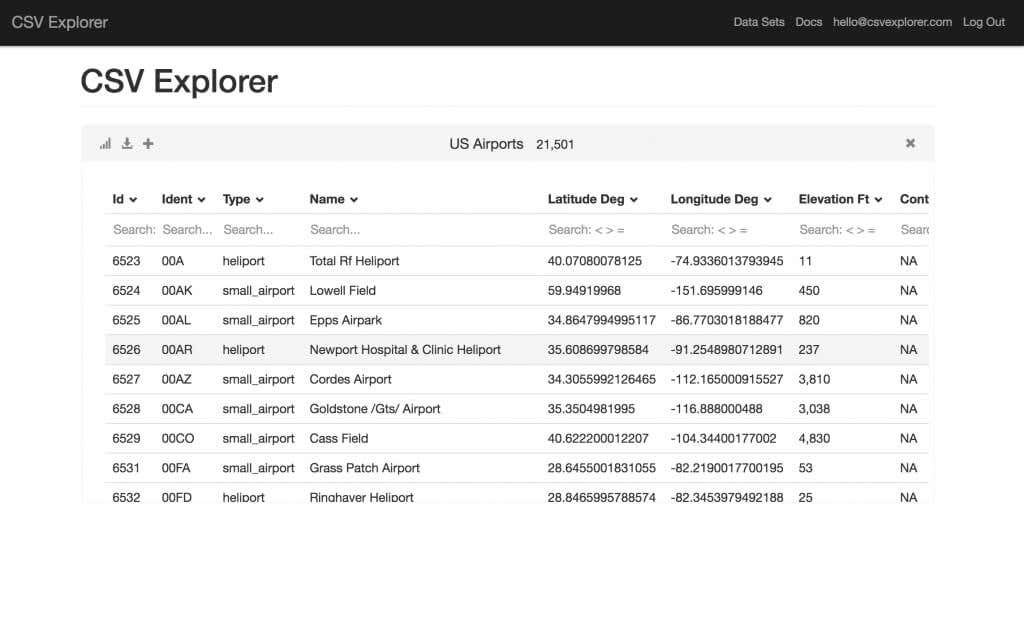

Method #5: Use Premium Tools

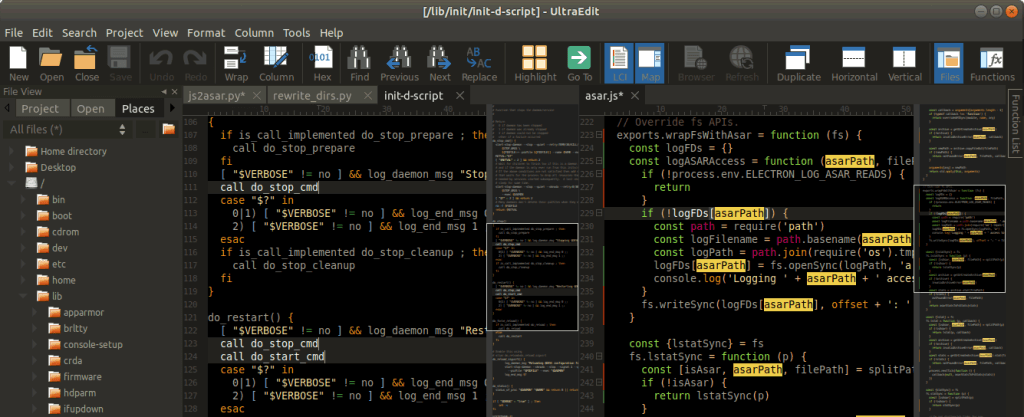

The text editors we saw in the first method weren’t dedicated tools meant for CSV processing. They were general-purpose tools that could be used to work with large .csv files as well.

But what about specialized applications? Aren’t there apps built specifically to solve this problem?

There are, actually. CSV Explorer, for example, builds on the very approach described in the last two methods (a SQL database and Python code) to create an app capable of viewing and editing CSV files of any size. You can do everything you’d expect from a spreadsheet tool in CSV Explorer, such as creating graphs or filtering the data.

Another option is UltraEdit. Unlike CSV Explorer, it is meant not just for .csv files but for any type of text file. It can easily handle text and CSV files of a few gigabytes, with an interface similar to many of the free editors we discussed earlier.

The only drawback with these tools is that they are premium applications, so you need a paid license to use them. You can always start with their free trials to check out their features, or to handle a one-time job.

What Is the Best Way To Open Large Text and CSV Files?

In this age of Big Data, it’s not uncommon to run into text files running into gigabytes, which can be hard to even view with built-in tools like Notepad or MS Excel. To open such large CSV files, you usually need a third-party application.

If all you want is to view such files, then Large Text File Viewer is the best choice for you. For actually editing them, you can try a feature-rich text editor like Emacs, or go for a premium tool like CSV Explorer.

Techniques like splitting the CSV file or importing it into a database involve just too many steps. You’re better off getting a paid license of a dedicated premium tool if you find yourself working with huge text files a lot.